During an incident response, you rarely have the luxury of months of full packet capture or forensic images of every machine. That is not a dead end, though: log files throughout your network hold artifacts you can analyze. Two data sources in particular tend to be the most useful: web server logs and proxy logs.

If you think about the most common attack patterns, they are either attacks on poorly configured web sites or phishing/malware downloads. If you are using a proxy server, its logs will show the phishing traffic, and the web server logs (unless they have been compromised) will hold traces of the attack.

Web Server

In a typical web server attack you will see traffic from three phases: reconnaissance, attack, and exploit. Reconnaissance traffic is usually discoverable in the log files by counting the pages viewed by each distinct IP. If one address shows lots of quick, sequential page requests, it is reasonable to assume it is a scan.
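As a rough illustration of the per-IP page count, here is a minimal sketch. It assumes the server writes the Apache/Nginx combined log format; the function names and the `min_pages` threshold are hypothetical, and it only counts distinct pages, not how quickly they were requested.

```python
import re
from collections import defaultdict

# Assumption: combined log format, e.g.
# 10.0.0.5 - - [01/Jan/2024:00:00:00 +0000] "GET /page HTTP/1.1" 200 512
LOG_RE = re.compile(r'^(?P<ip>\S+) \S+ \S+ \[[^\]]+\] "(?P<method>\S+) (?P<path>\S+)')


def pages_per_ip(lines):
    """Map each client IP to the set of distinct paths it requested."""
    seen = defaultdict(set)
    for line in lines:
        m = LOG_RE.match(line)
        if m:
            # Drop the query string so /a?x=1 and /a?x=2 count as one page.
            seen[m.group("ip")].add(m.group("path").split("?", 1)[0])
    return seen


def likely_scanners(lines, min_pages=50):
    """Return (ip, distinct_page_count) pairs at or above the threshold, busiest first."""
    counts = {ip: len(paths) for ip, paths in pages_per_ip(lines).items()}
    flagged = [(ip, n) for ip, n in counts.items() if n >= min_pages]
    return sorted(flagged, key=lambda item: -item[1])
```

The threshold is a judgment call; a busy site with legitimate crawlers will need a higher value or an allow list.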

Attack detection could include looking for SQL injection strings and other escape characters in POST variables. Using PCRE is a quick way to find that data. These are two patterns I use often:

'drop|delete|truncate|update|insert|select|declare|union|create|concat'

'((%3D)|(=))[^\n]*((%27)|(')|(--)|(%3B)|(;))|\w*((%27)|('))((%6F)|o|(%4F))((%72)|r|(%52))'
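A minimal sketch of running the two patterns over log lines with Python's `re` module follows. It assumes the second pattern is meant to contain `[^\n]` and `\w` (the backslashes appear to have been lost in publishing), and the function name is hypothetical. The keyword pattern is deliberately broad and will be noisy, so treat hits as leads rather than findings.

```python
import re

# SQL keywords, matched case-insensitively anywhere in the line.
KEYWORDS = re.compile(
    r"drop|delete|truncate|update|insert|select|declare|union|create|concat",
    re.IGNORECASE,
)

# SQL meta-characters, raw or URL-encoded (%27 is ', %3D is =, %3B is ;).
# Assumption: [^\n] and \w are the intended forms of the garbled original.
META = re.compile(
    r"((%3D)|(=))[^\n]*((%27)|(')|(--)|(%3B)|(;))"
    r"|\w*((%27)|('))((%6F)|o|(%4F))((%72)|r|(%52))",
    re.IGNORECASE,
)


def suspicious_requests(lines):
    """Yield (line, reasons) for every log line that matches either pattern."""
    for line in lines:
        reasons = []
        if KEYWORDS.search(line):
            reasons.append("keyword")
        if META.search(line):
            reasons.append("metachar")
        if reasons:
            yield line, reasons
```

Keep in mind that standard access logs record the request line, not the POST body, so POST variables only show up if the server is configured to log them.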

In this scenario, let's say we have seen the scanning and the attack, and now we want to find the exploit. Look for pages that have been targeted for SQLi or other misconfigurations. While the attacker is still trying to compromise the site, the log will show many hits on the page with a low or consistent byte count. When the bytes for that page suddenly increase, it usually means the compromise succeeded.
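One way to spot that jump is to compare each response size with the median of earlier responses for the same page. The sketch below assumes the combined log format; `size_jumps`, `factor`, and `min_history` are hypothetical names and values, not a tuned detector.

```python
import re
from collections import defaultdict
from statistics import median

# Assumption: combined log format with the response size after the status code.
LOG_RE = re.compile(
    r'^(?P<ip>\S+) \S+ \S+ \[[^\]]+\] "(?P<method>\S+) (?P<path>\S+)[^"]*" '
    r'(?P<status>\d{3}) (?P<size>\d+|-)'
)


def size_jumps(lines, factor=5, min_history=5):
    """Flag responses far larger than the median of earlier responses for that path."""
    history = defaultdict(list)
    jumps = []
    for line in lines:
        m = LOG_RE.match(line)
        if not m or m.group("size") == "-":
            continue
        path = m.group("path").split("?", 1)[0]
        size = int(m.group("size"))
        previous = history[path]
        if len(previous) >= min_history:
            baseline = median(previous)
            if size > factor * max(baseline, 1):
                jumps.append((path, m.group("ip"), size, baseline))
        previous.append(size)
    return jumps
```

Pages whose size legitimately varies, such as search results or downloads, will trigger this too, so review the flagged requests alongside the attack traffic found earlier.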

Now we need to look for traces of the exploit. A common method is to upload a new page that provides a remote shell, so the attacker can install new code on the site or pivot inside the network. Often you will see a page being uploaded, accessed, and then quickly deleted. Because it has been deleted you will not easily find the page on the file system, but its accesses leave a trail in the web server logs. To discover it, create a list of the files that are supposed to be on the web server and diff it against the files accessed in the web server log. I do this with a Python script to speed up the process.
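The script itself is not shown here, so the following is only a sketch of the idea, not the author's implementation. It assumes the combined log format and that the list of expected paths comes from somewhere trustworthy, such as a deployment manifest or a clean backup; `unexpected_paths` is a hypothetical name.

```python
import re
from collections import defaultdict

# Assumption: combined log format.
LOG_RE = re.compile(
    r'^(?P<ip>\S+) \S+ \S+ \[[^\]]+\] "(?P<method>\S+) (?P<path>\S+)[^"]*" '
    r'(?P<status>\d{3})'
)


def unexpected_paths(lines, expected_paths):
    """Return {path: [client IPs]} for successful requests to paths not on the expected list."""
    expected = set(expected_paths)
    found = defaultdict(set)
    for line in lines:
        m = LOG_RE.match(line)
        if not m:
            continue
        path = m.group("path").split("?", 1)[0]
        # Only 2xx responses: the page existed when it was requested.
        if m.group("status").startswith("2") and path not in expected:
            found[path].add(m.group("ip"))
    return {path: sorted(ips) for path, ips in found.items()}
```

Sites that route dynamic URLs through a front controller will produce many paths that never exist as files, so the expected list may need to include known routes as well.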

Beyond attack detection, looking through log files can help determine whether there is a long-standing compromise. Look at pages by bytes uploaded or downloaded and ask: Should the site allow uploads? Should a single page have gigabytes of data being downloaded? Either could be a sign of a tunnel being used to exfiltrate data.
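To rank pages by data served, a sketch like the one below can help. It assumes the combined log format, which records only the response size; upload sizes would need a custom log format, so this covers the download side only. `bytes_by_path` is a hypothetical name.

```python
import re
from collections import Counter

# Assumption: combined log format; the size field is bytes sent to the client.
LOG_RE = re.compile(
    r'^\S+ \S+ \S+ \[[^\]]+\] "\S+ (?P<path>\S+)[^"]*" \d{3} (?P<size>\d+|-)'
)


def bytes_by_path(lines):
    """Return (path, total_bytes, hits) tuples, largest total first."""
    totals, hits = Counter(), Counter()
    for line in lines:
        m = LOG_RE.match(line)
        if not m or m.group("size") == "-":
            continue
        path = m.group("path").split("?", 1)[0]
        totals[path] += int(m.group("size"))
        hits[path] += 1
    return [(path, total, hits[path]) for path, total in totals.most_common()]
```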

Proxy Servers

Proxy servers are a wealth of information for incident response. You can perform much the same analysis as with web server logs, but you also gain visibility into beaconing and can look for traffic to known malicious sites. For example, suppose a spear phishing attack was used against your business and you know the email contains a link to badsite.com/malware.exe. You responded by alerting staff not to click the link, but some users may have checked their email before the alert went out. Search for the URL in your proxy log and it will show the internal IP of each host that downloaded it.

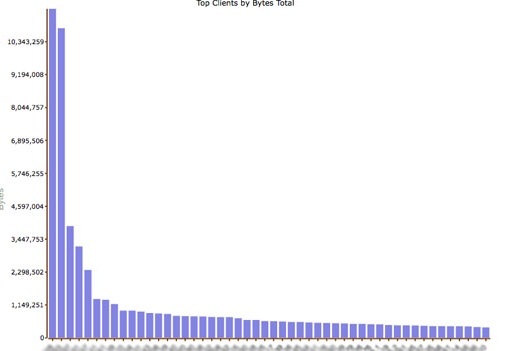
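The same search can be scripted. This sketch assumes the proxy writes Squid's native access.log format (timestamp, elapsed time, client, result code, bytes, method, URL, and so on); other proxies use different layouts, so the field positions are an assumption, and `hosts_that_fetched` is a hypothetical name.

```python
from collections import defaultdict


def hosts_that_fetched(lines, needle):
    """Return {client_ip: [matching URLs]} for proxy log lines whose URL contains needle."""
    hits = defaultdict(list)
    wanted = needle.lower()
    for line in lines:
        fields = line.split()
        if len(fields) < 7:
            continue  # not a complete Squid native log line
        client, url = fields[2], fields[6]
        if wanted in url.lower():
            hits[client].append(url)
    return dict(hits)
```

Calling `hosts_that_fetched(open("access.log"), "badsite.com/malware.exe")` would list every internal address that requested the link, including any that were blocked, so check the result code before assuming a download succeeded.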

Proxy logs can also show whether internal hosts have been compromised and are being used as tunnels: look at the total data transferred per host. Likewise, the number of hosts contacted by an individual host can show whether it is being used for scanning.
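Both measures can be pulled from the proxy log in one pass. The sketch below again assumes Squid's native access.log format, where the size field is the bytes delivered to the client, so it reflects downloads rather than uploads. `host_profile` is a hypothetical name.

```python
from collections import defaultdict
from urllib.parse import urlsplit


def host_profile(lines):
    """Return {client_ip: (total_bytes, distinct_destination_count)}."""
    totals = defaultdict(int)
    destinations = defaultdict(set)
    for line in lines:
        fields = line.split()
        if len(fields) < 7 or not fields[4].isdigit():
            continue
        client, size, method, url = fields[2], int(fields[4]), fields[5], fields[6]
        if method == "CONNECT":
            # CONNECT lines log host:port instead of a full URL.
            dest = url.rsplit(":", 1)[0]
        else:
            dest = urlsplit(url).hostname
        totals[client] += size
        if dest:
            destinations[client].add(dest)
    return {client: (totals[client], len(destinations[client])) for client in totals}
```

Sorting the result by either value gives a short list of hosts worth a closer look; what counts as too much data or too many destinations depends on what is normal for your network.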

I find it easier to work with the data if I visualize it; you can sort the log files and pull them into Excel.

You may also want to use the Google Maps API to see where your traffic is coming from and determine whether it is expected.
