Is it possible to exclude certain folders from scanning?

Thanks!

Hi Kit

How are you doing? I hope that you are well.

Many thanks for the explanation... that does indeed clarify things further. :D

Regards

Baldrick

That should be the detailed description of the official product! Great and informative!

Thank you.

Kit, thank you very much for the time you've given to this topic... especially given the detail and depth provided. The absolute first thing that I always do is exclude all of my applications from the realtime monitors, then those paths/folders suggested by Microsoft and my software vendors. I have several large companies who have only one thing in common: they all have a particular inventory system on their terminal servers. For example, this month we simply attempted to move from Bitdefender to Webroot... that's all... and the software vendors are claiming that Webroot is causing various problems. The executables were already excluded (from day 1). So, as per your write-up, we can safely assume there is nothing more we can do, since it's all pointless anyway, and Webroot knows everything already, and therefore explicitly excluding our dataset would be a waste of time, etc.

As such, I don't think it's necessary to waste anyone's time on this any further. I think that all of the enterprise/corporate users in the biz forum should read your posts, Kit, as they were very enlightening for me. They let us know that the only thing we can do is exclude the apps... the data is already ignored by default, as WR knows all.

There are other problems I've been experiencing that simply won't work well with this sort of approach. There are commercial applications that update themselves very regularly (at least once a day), in much the same way that an AV product does. Often the application itself is updated as well (sometimes to the tune of many binaries). In the end, it is a solution that is needed, not a dissertation; therefore, we'll see what support can offer, and for now we've already moved our servers over to ESET and the problems we were having have subsided. Problem solved. Thanks again to all, and I'd suggest directing the security experts and software developers who can't get it to work (see THIS TOPIC in the biz forum) to the wealth of information disclosed here on the topic...

What I'm pointing out is that the problem is not what people think the problem is. I am not saying the problem is not there. I am saying there's no need to call for amputating the leg when there's a splinter in the finger, so to speak.

Contacting support gets threat research on the task, and things are handled within a few minutes to a few hours (usually under 30 minutes).

I also specifically refrained from mentioning it before due to the length of the prior post, but threat research has the ability to help with applications that update themselves daily or even hourly. (Bear in mind that application data updates don't cause a problem; only updates to the application's program code do. But those are common enough too.) At least they did when I was working there. They can make special rules specifically for a company that affect that company only. So effectively, they create the effect of "ignoring" the executable folder for the target application. Yes, this technically opens up the potential for a dropper to target that folder, but two things factor in. First, hard positives are still detected, so known malware is still caught even in the "excluded" folder. Second, it simply stops monitoring those files as Unknowns within the scope of the company in question, so if something or somebody tried to use that to get a false negative (get a virus marked as good), it wouldn't work.
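The company-specific rules above are only described in outline. The sketch below is a hypothetical model of the verdict logic as described, not Webroot's actual code: the `classify` function, the `Verdict` names, the placeholder hashes, and the per-company override table are all assumptions for illustration. It shows the two properties mentioned: known malware is still blocked inside the "ignored" folder, and the override applies to one company only without ever marking a file as good.

```python
from enum import Enum
from pathlib import PureWindowsPath


class Verdict(Enum):
    BLOCK = "block"      # known malware ("hard positive"): acted on everywhere
    ALLOW = "allow"      # known good
    IGNORE = "ignore"    # unknown, but inside this company's override folder
    MONITOR = "monitor"  # unknown: watched until it can be classified


def classify(file_hash: str, path: str, company: str,
             known_bad: set[str], known_good: set[str],
             overrides: dict[str, list[str]]) -> Verdict:
    """Hypothetical verdict logic for a company-specific folder override."""
    # Known malware is still caught, even inside an "ignored" folder.
    if file_hash in known_bad:
        return Verdict.BLOCK
    if file_hash in known_good:
        return Verdict.ALLOW
    # The override is looked up per company, so other companies are unaffected,
    # and it yields IGNORE rather than ALLOW: the file is never marked as good.
    target = PureWindowsPath(path)
    for folder in overrides.get(company, []):
        base = PureWindowsPath(folder)
        if target == base or base in target.parents:
            return Verdict.IGNORE
    return Verdict.MONITOR


if __name__ == "__main__":
    overrides = {"acme": [r"C:\Program Files\InventoryApp"]}
    known_bad = {"deadbeef"}
    update = r"C:\Program Files\InventoryApp\bin\update.exe"
    print(classify("1234", update, "acme", known_bad, set(), overrides).name)      # IGNORE
    print(classify("1234", update, "other-co", known_bad, set(), overrides).name)  # MONITOR
    print(classify("deadbeef", update, "acme", known_bad, set(), overrides).name)  # BLOCK
```

Returning a separate `IGNORE` verdict, instead of reusing `ALLOW`, is what keeps the override from leaking into any shared reputation data in this model.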

As for "That Topic" that you are fond of pointing out...

The very first requester is talking about DB files (not affected, and Webroot doesn't lock files while scanning them anyway), server shares (not affected, as Webroot doesn't scan them unless explicitly instructed to anyway), and files executed from server shares (yes, they are scanned when they are executed, but not locked, so there is no performance issue). So far, every single post on that thread is from people who do not understand how Webroot operates and are calling for solutions based on the operating methods of other antivirus software. It's like people who have never seen a motorcycle before complaining that their motorcycle needs two more wheels, a steering wheel, a gas pedal, and a brake pedal because "cars need to have those things!"

The proper solution is not "Spend hours deploying something else". The proper solution is "Spend seven minutes contacting Webroot Enterprise Support, then have it fixed, usually within half an hour, and definitely faster than it would take to deploy something else." As a support person yourself, you should know that you should describe symptoms and ask for the appropriate solution, not prescribe your own solution. You are an expert at the software you work with and the things you do. Others are experts at Webroot software.

Remember: those seven minutes you didn't want to spend to get a proper solution in this case are leaving you running something that won't detect a new virus for several days or more (and statistically won't detect 55% of all the malware out there).

I'm sure I'm out of my league, but I'm learning a lot here. Are you sure you want to be Retired, @ ?

Moved on to Bigger and Better Things. ^.^ Talk to Webroot if you want them to try to recruit me back. XD

Whoa! Sounds like I wish I would... could... should have, if I could! Happy to have you with us anyway, @

That was worded quite nicely @ . It's a shame to see you go. All the best!

During operations on large files, Webroot contributes a significant portion of the CPU overhead. If the customer believes these particular files are safe, they should have the option to exclude them from protection and unneeded overhead.

Hi boyeld

A lot of users agree, and as a result there is a Feature Request open (see HERE) asking for this. It is currently available in the Endpoint Security/Business version, and therefore we are hopeful that it will be implemented in the Home/Consumer version soon.

Regards, Baldrick

As the OP, I would like to note that it took Webroot 5 years to reach this conclusion.

I do not think that anyone would dispute this observation. ;)

On a personal note... it is my understanding that Webroot has a roadmap/plan for what they will introduce into the product... and we just have to assume that this has not figured highly in those plans, most likely because their top aim is to keep up the protection levels of WSA.

I, again personally, would prefer top-notch protection with fewer features rather than more features with potentially lesser protection... but as I said... that is just my personal preference. :D

"During operations on large files Webroot contributes a significant portion of the CPU overhead."

Normally it doesn't, though.

I can perform operations on 280GB raw video files without a peep from Webroot.

So if it is, then something else is wrong that should be fixed.

Otherwise it's like cutting off your nose because it itches. The side effects suck.

So if you're encountering that and it's not an exercise in theoretical questioning, contact support and they'll be happy to help you resolve it.

As for the further portion of the thread...

Businesses are under a "YODF" style contract when they exclude a directory. They fully understand and generally have competent legal teams that will say "Yeah, we did that, our bad." if they screw up by doing that. They have professionals who are able to calculate the risk and benefit balance.

Individuals don't have professionals. They'll happily follow directions from a web site to turn off their AV or "exclude this folder" so they can see this "cool video of a kitten" their friend definitely sent them. They aren't able to understand YODF as well, and so they just don't get a stick to hurt themselves with. Remember: As long as it's a feature request that's not implemented, it's not implemented, and that's the way it should be.

And this "we know better than you what's best for you" approach is the reason I moved from Webroot to another solution.

These days, users who are likely to download an .exe from their email to see the kitten are protected by either Windows Defender or whatever their corporate IT installs. Neither is the target of this product. Moreover, any online instructions will tell them to disable the AV altogether (I assume Webroot still allows that) rather than exclude a folder.

So what Webroot is left with is people who know what they are doing. And they choose to keep telling power users, "Yeah, this feature that every other AV has, trust us, it's bad for you. We know better."

It's an insulting attitude, but ultimately it's Webroot's choice. Just as my choice is to go with a solution that gives me and my customers full control over what the AV does and does not do.

If you don't use Webroot for yourself or your customers, why are you here?

You can unsubscribe from the thread if the notifications drew you back. Head to https://community.webroot.com/t5/user/myprofilepage/tab/user-subscriptions and check the ones that you want to remove, then use the dropdown link above the list to unsubscribe from them.

In any case, a good number of very common droppers will probe for ignored directories. It's not at all uncommon for me to find the malware sitting in directories of games, video files, or other things that have been set to excluded in the other AV.

Lack of exclusion isn't just a "we know better than you" situation. It's an enforced "this is best practice" situation to reduce problems for Webroot customers. For every problem that could be solved by an exclusion, there are dozens caused by the same exclusions. So it's better to solve a problem properly than to disable protection, and when I worked there, we were well aware that some folks with a desperate need for control would end up making exclusions and then getting infected and costing more than their subscription in support agent salary to fix it.

Webroot: "It shouldn't be slowing down access to that large file. Let's fix it so things are protected and fast."

Others: "Oh, yeah, it'll always slow it down. So you have to turn off protection to fix it."

You are missing the point. Your or Webroot's best practice is not necessarily the best for everyone. And at least some of your competitors recognize it.

But you are right. I was drawn here by subscription. And since it seems another of Webroot's best practices is to shoo away former clients who tell you the reason they left, I will refrain from further posting.

In any case, it still stands that if a large file being accessed is slowing down because of Webroot (Please bear in mind that Webroot "consuming CPU cycles" may not actually be slowing anything down in many cases, since in a lot of low-threat situations it will only consume otherwise-unused CPU cycles), this should not be happening and the official support team will be very happy to help remedy that without forcing you to reduce your protection by turning it off.

“When we designed SecureAnywhere, we did so with common conflicts and performance problems in mind, so file/directory exclusion isn’t necessary.”

"When we develop bridges, we do so with common loads and problems in mind, so the beams can be half the size of the competitor's bridges"

Perhaps it's unfair to make fun of marketing fluff, but still. I am "uncommon" in that I use Visual Studio to develop applications, which means that I am running a program that produces a program. Sometimes Visual Studio is unable to write the new program to disk because the older version is being inspected by the antivirus.

On a more common note, I just had a pop-up from Outlook about an email attachment that was "open by another process". The attachment was a Word document I had written the night before, and the other process could in theory be some virus that snuck past Webroot, but my suspicion is that Webroot was inspecting it.

I sense a narrow focus in which computer security is the theme, but a computer is a tool that still has to work. My recommendation would be a "webroot-professional" for people who are not simply engaged in writing memos or playing games.

Hi JeffKelly

Welcome to the Community Forums.

Folder exclusion is already included in the Endpoint Security (Business) version, and we believe that it is on the roadmap for the Home (Consumer) version, but we do not have formal confirmation of this or when such an inclusion may occur.

Regards, Baldrick

Be careful about thinking it's "Just Marketing" when there's a tech person involved who understands kernel drivers.

You are anything but "uncommon" in that sense. What is "common" in that wording is much more meta than simple coding. When I worked at Webroot, coders made up a surprisingly large share of our user base, based on the presence count of compilers on Webroot-protected systems. (It's literally a number, mind you, so don't go shouting privacy foul. We could say we want to know how many systems a specific compiler is on, and it would give us nothing but a count. We -must- know the hash of the compiler executable in order to look it up as well, so random files cannot be found.)
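The lookup described above, where the only question you can ask is "how many systems have the file with this exact hash?", can be sketched as a counter keyed by digest. This is a hypothetical illustration, not Webroot's backend: SHA-256 is an assumed choice of hash, `PresenceCounter` and `digest_of` are invented for the example, and it assumes each endpoint reports a given file once.

```python
import hashlib
from collections import Counter


def digest_of(file_bytes: bytes) -> str:
    """SHA-256 hex digest (an assumed choice of hash for this sketch)."""
    return hashlib.sha256(file_bytes).hexdigest()


class PresenceCounter:
    """Hypothetical sketch: count how many endpoints report a file, by hash only."""

    def __init__(self) -> None:
        # digest -> number of reporting endpoints; no names, paths, or contents kept
        self._counts = Counter()

    def report(self, digest: str) -> None:
        # Only the digest arrives. Assumes each endpoint reports a given file once.
        self._counts[digest] += 1

    def count(self, digest: str) -> int:
        # You must already know the exact digest to ask, and a number is all you get.
        # Counter returns 0 for unseen digests without storing them.
        return self._counts[digest]


if __name__ == "__main__":
    counter = PresenceCounter()
    compiler = digest_of(b"stand-in bytes for a compiler executable")
    for _ in range(3):  # three endpoints report the same compiler
        counter.report(compiler)
    counter.report(digest_of(b"some unrelated file"))
    print(counter.count(compiler))         # 3
    print(counter.count(digest_of(b"x")))  # 0
```

The public interface offers no way to enumerate or search what has been reported; without the exact digest there is nothing to look up.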

"Sometimes visual studio is unable to write the new program to disk because the older version is being inspected by the anti-virus."

" I just had a pop-up from outlook about an email attachment that was "open by another process". The attachment was a word document I had written the night before and the other process could in theory be some virus that snuck past webroot, but my suspicion is the webroot was inspecting it. "

Great news! It's not Webroot inspecting. Webroot doesn't do blocking I/O. It does not open files for exclusive access when just scanning. Have you checked whether you have Windows Defender running? It does.

As a coder, you should be familiar with stream (including file) open commands. You pick the file, you pick the mode, you get a handle.

In Visual Studio, unless you're writing multi-hundred-megabyte files, Webroot is generally finished scanning within a fraction of a second. You can easily see its actions in Procmon. That being said, "generally" is the operative term. After all, some people have extra factors that impact I/O performance, for example. In any case, Webroot gets a file handle, yes, but it's a non-exclusive, non-blocking file handle.

So even if something is driving your IOPS down the drain and Webroot is going to take, say, twenty seconds to scan, you can still modify the file, overwrite the file, or delete the file and Webroot will not get in the way -unless- Webroot has decided that the file matches the specifications of something highly likely to be malicious. Mind you, if it's taking Webroot twenty seconds to scan it, it would also take you that or more to write it just in I/O lag, but that's beside the point.
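The "you pick the mode" point can be made concrete. On Windows, each open (for example via `CreateFileW`) states the access it wants and, through its share mode (`FILE_SHARE_READ`, `FILE_SHARE_WRITE`, `FILE_SHARE_DELETE`), what it will let other handles do; a new open succeeds only if it is compatible with every handle already open. The sketch below is a simplified Python model of that check, not a Windows API binding and not Webroot's code. The `Handle` and `can_open` names and the example handles are assumptions for illustration, and the model ignores details such as attribute-only opens.

```python
from dataclasses import dataclass
from enum import Flag


class Access(Flag):
    NONE = 0
    READ = 1
    WRITE = 2
    DELETE = 4


ALL = Access.READ | Access.WRITE | Access.DELETE


@dataclass(frozen=True)
class Handle:
    access: Access  # what this opener wants to do with the file
    share: Access   # what this opener allows other handles to do


def can_open(existing: list[Handle], request: Handle) -> bool:
    """Simplified model of the share-access check performed on each open."""
    for h in existing:
        # The new access must be allowed by every existing handle's share mode...
        if (request.access & h.share) != request.access:
            return False
        # ...and every existing handle's access must be allowed by the new share mode.
        if (h.access & request.share) != h.access:
            return False
    return True


if __name__ == "__main__":
    scanner = Handle(Access.READ, ALL)            # non-exclusive, read-only scan handle
    exclusive = Handle(Access.READ, Access.NONE)  # a scanner that denies all sharing
    linker = Handle(Access.WRITE | Access.DELETE, Access.READ)  # overwrite/replace output
    print(can_open([scanner], linker))    # True
    print(can_open([exclusive], linker))  # False
```

In this model, a read-only handle that shares read, write, and delete stays out of the way of a writer that itself tolerates readers, while a handle with share mode 0 blocks it. The model also shows the flip side: a writer that demands exclusive access (share mode 0) would be refused while any other handle is open, so the share mode the compiler or linker requests matters as much as the scanner's.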

"'...highly likely to be malicious?' So it CAN get in the way!" Yep. But solving this is properly done by either looking into the fact that it's a false "suspicious" or not coding in a way that is highly suspicious. The only times I've had Webroot block things when compiling was when I was actually trying to make something highly suspicious or potentially malicious for testing purposes.

The "Common" conflicts: Blocking I/O. So your development is perfectly within that common behavior. Did you know that thumbnail generation in Explorer will block more than Webroot does on email attachments?

Take a look with procmon sometime. While I don't rule out bugs (heck, I worked to handle them when I worked there), I think you'll find that it's not Webroot getting in the way.

Since we both like similes:

"Every few weeks when I walk in my back door, there's a high pitched sound and the door has a hard time closing the last few inches. So I want to take the security sensor off it. Couldn't possibly be the hinge."

Here is Google's "strong" advice re. installation of its G Suite syncing software, to permit MS Outlook users to sync their pst files with G Suite email, etc.:

"Dear G Suite Sync user,

Thanks for installing G Suite Sync for Microsoft Outlook. This software will synchronize your calendar, contacts, email, notes, tasks and domain's global address list with G Suite. Before you get started, there are a few things you should know about the current version of G Suite:

• Your journal entries will not synchronize with G Suite.

• G Suite Sync will initially download up to 1GB of email from the G Suite Server to your desktop. You can change this setting from the system tray menu. (learn more).

• Your initial sync can take a long time, because there's a lot of email to download. To see your synchronization status, look at synchronization status in your system tray.

• We strongly recommend that you create an exclude rule in your antivirus software so that it does not scan any files located under %LOCALAPPDATA%\Google\Google Apps Sync.

For more information, go here:

• G Suite Sync User Manual

• What's different and won't sync between Microsoft Outlook and G Suite

• Read the Frequently Asked Questions

• Tools for administrators deploying G Suite Sync

• How to get help

Thanks for using G Suite Sync,

The G Suite Sync Team"
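For readers comparing approaches, this is roughly what an exclude rule like the one recommended above means in a traditional AV product: every file at or below the excluded directory is skipped, whatever its origin. The sketch assumes a simple recursive directory rule with `%LOCALAPPDATA%` already expanded (the user name `alice` below is hypothetical); real products differ in whether they also support wildcards, extensions, or per-process rules.

```python
from pathlib import PureWindowsPath


def is_excluded(path: str, excluded_dirs: list[str]) -> bool:
    """True if `path` is an excluded directory or lies anywhere beneath one.

    Sketch only: assumes plain recursive directory rules with environment
    variables already expanded.
    """
    target = PureWindowsPath(path)
    for folder in excluded_dirs:
        base = PureWindowsPath(folder)
        # Compare whole path components, not raw string prefixes, so that
        # "...\Google Apps Sync Helper" does not match "...\Google Apps Sync".
        # PureWindowsPath comparisons ignore case, as Windows paths do.
        if target == base or base in target.parents:
            return True
    return False


if __name__ == "__main__":
    rules = [r"C:\Users\alice\AppData\Local\Google\Google Apps Sync"]
    print(is_excluded(r"C:\Users\alice\AppData\Local\Google\Google Apps Sync\mail.db", rules))  # True
    print(is_excluded(r"c:\users\alice\appdata\local\google\google apps sync\x.exe", rules))    # True
    print(is_excluded(r"C:\Users\alice\AppData\Local\Google\Google Apps Sync Helper\x.exe", rules))  # False
```

Because the rule is purely location-based, an executable dropped into that folder is skipped just like the mail store the rule was written for.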

These suggestions are for "traditional" AV software. Since Webroot SecureAnywhere functions differently, exclusions like these are only needed in rare cases.

-Dan

"As a coder, you should be familiar with stream (including file) open commands. You pick the file, you pick the mode, you get a handle."

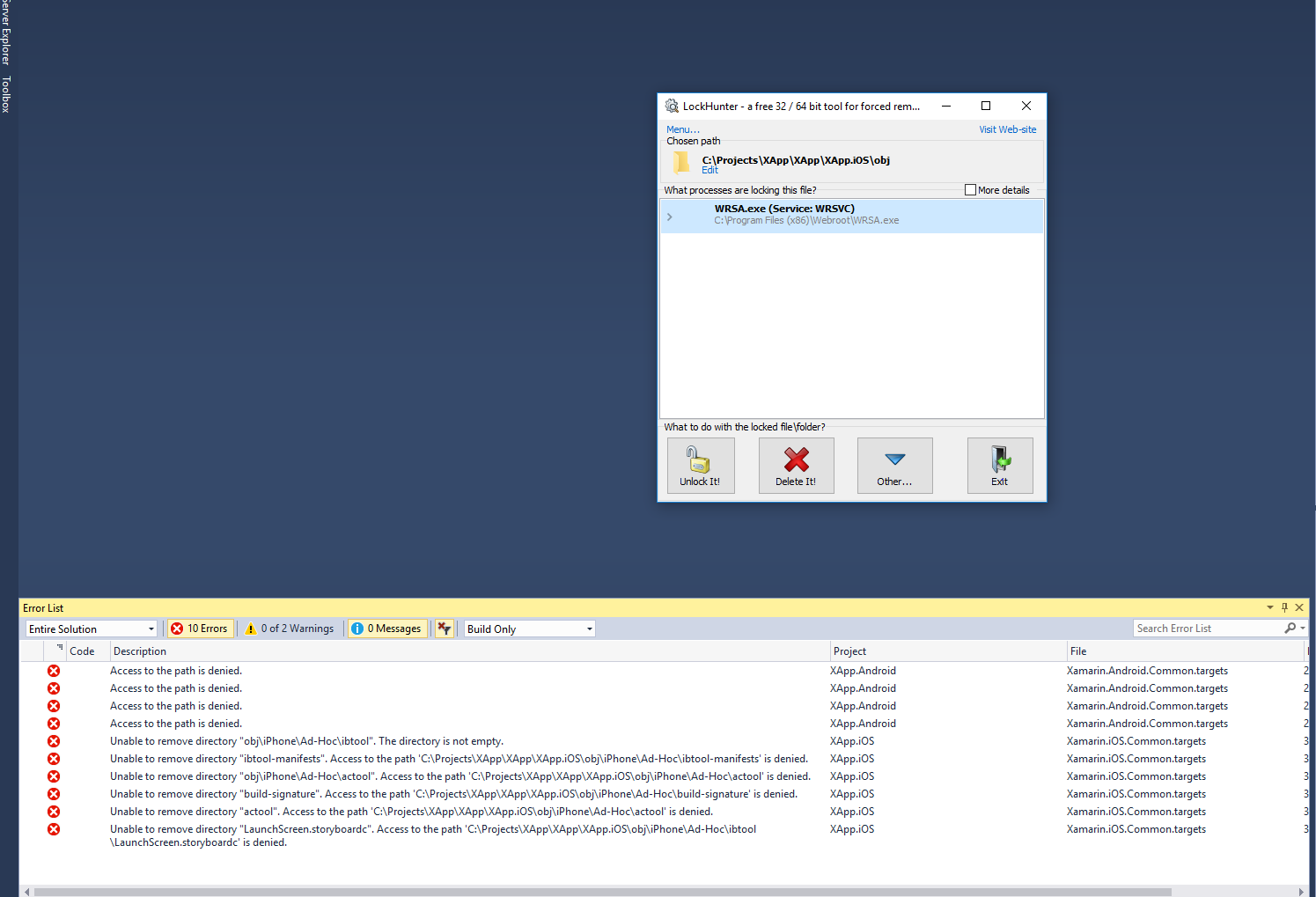

So why am I getting locked files that are causing compilation to fail? LockHunter reports WRSA holding the locks.

Please submit a support ticket for this case. Shutting down WSA when compiling may be a temporary solution, though obviously not ideal.

-Dan

Hey, Dan, how did this get anything to lock? Last time I watched Webroot with ProcMon, it never did any exclusive opens or locks until it found something it explicitly didn't like (Full Positive). Did the code base change that dramatically?
