Hi, I have a technical question about Webroot Endpoint memory usage.

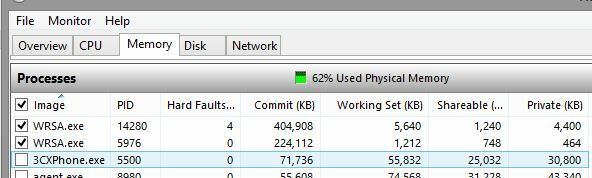

I've found Webroot commits around 600 MB of memory when it only uses about 7 MB.

We have customers asking about it, as that's a massive amount of memory for a program to reserve.

You can see it for yourself in Resource Monitor under memory and commit.

Why does Webroot reserve so much and use so little?

Thanks in advance!

Answer

Commit memory very high!

Best answer by TechToc

That is true; however, because commit memory is flexible in its usage, it can also go down, allowing other applications to make use of it if necessary.

I can understand your point; however, commit memory isn't really written to disk either. It's more of a virtual marker held in case a process needs it. The commit size is recorded in memory, but the contents of that buffer aren't written until they're needed. There are tools that can force it to be written to disk, but generally it's just marked and doesn't use any disk I/O until needed.
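To see the gap between committed and actually used memory for yourself, here is a minimal Python sketch. It is only an illustration, not how Webroot allocates memory: the 64 MB size, the 16-page count, and the `reserve_and_touch` helper are made up for the example. It maps an anonymous region and writes to just a few pages of it. On Windows I'd expect a mapping like this to show up under Commit in Resource Monitor while the working set grows only by the pages that were touched, but treat that as an assumption to verify on your own machine.

```python
import mmap

PAGE = mmap.PAGESIZE               # page size reported by the OS
RESERVED_BYTES = 64 * 1024 * 1024  # illustrative size, not a Webroot figure


def reserve_and_touch(reserved_bytes, pages_to_touch):
    """Map an anonymous region and write to only the first few pages.

    Returns the mapping and the number of bytes in the touched pages.
    """
    buf = mmap.mmap(-1, reserved_bytes)  # anonymous mapping, no file behind it
    for i in range(pages_to_touch):
        buf[i * PAGE] = 1                # writing one byte touches that page
    return buf, pages_to_touch * PAGE


buf, touched = reserve_and_touch(RESERVED_BYTES, 16)
print(f"mapped {len(buf) // 2**20} MiB, touched {touched // 1024} KiB")
buf.close()
```

If you pause the script before `buf.close()` (for example with an `input()` call), you can compare the process's Commit and Working Set columns in Resource Monitor.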

In most cases Windows is able to handle these elastic memory fluctuations because the page file isn't a static size. While you can 'hard set' a page file size so that it can't grow, the default options set at install allow for some dynamic resizing if necessary.
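To put the 600 MB figure in perspective: the system commit limit on Windows is roughly physical RAM plus the current page file size. Here is a rough back-of-the-envelope sketch. The 8 GB of RAM and 2 GB page file are hypothetical numbers picked for illustration, not measurements from any real machine, and the function names are made up for the example.

```python
def commit_limit_mb(ram_mb, pagefile_mb):
    """Approximate system commit limit: physical RAM plus page file."""
    return ram_mb + pagefile_mb


def commit_share_percent(process_commit_mb, ram_mb, pagefile_mb):
    """Share of the commit limit taken by one process's commit charge."""
    return 100.0 * process_commit_mb / commit_limit_mb(ram_mb, pagefile_mb)


# Hypothetical machine: 8 GB RAM, 2 GB page file, one process committing 600 MB.
share = commit_share_percent(600, ram_mb=8192, pagefile_mb=2048)
print(f"{share:.1f}% of the commit limit")  # prints "5.9% of the commit limit"
```

Because the page file can grow under the default settings, the real limit on such a machine could end up higher than this estimate.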

On the data center side, servers have a number of built-in features and optimizations that limit this kind of paging and keep this normal behavior from getting out of hand in the way you describe. Additional RAM, faster disks, and huge pre-allocated page files are all designed to eliminate the exact issue you mention, and they apply to all software, so the worst-case scenario is very unlikely for a process that is simply marking a reserve of memory. I have a process running with 1.5 GB of commit memory at this moment with very little impact on the normal functions of the system, and I haven't rebooted my machine in almost two weeks. Additionally, while it can certainly seem as if the commit size keeps increasing, it's unlikely to increase sharply unless WSA begins journaling a process, at which point classifying that file or applying a console override would address the journaling.

